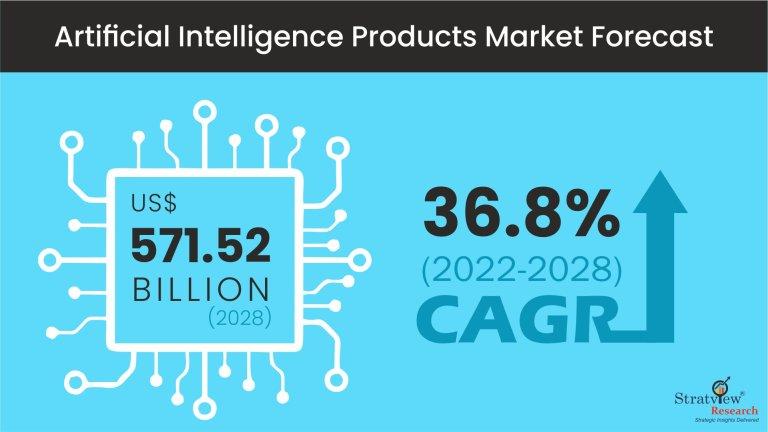

Artificial Intelligence Products Market Set to Experience Phenomenal Growth from 2022 to 2028

The Artificial Intelligence Products Market is segmented by Product Type (Hardware, Software, Services), Technology (Deep Learning, Machine Learning, Natural Language Processing, Machine Vision), End-Use (Healthcare, BFSI, Law, Retail, Advertising & Media, Automotive & Transportation, Agriculture, Manufacturing, Others), and Region (North America, Europe, Asia-Pacific, and the Rest of the World).

Navigating the regulatory landscape for AI products is becoming increasingly complex as the technology advances and its applications expand. Governments and regulatory bodies worldwide are grappling with the challenge of creating frameworks that ensure the safe and ethical use of AI while fostering innovation. Key areas of concern include data privacy, transparency, accountability, and bias in AI systems.

Data privacy is a primary focus, with regulations like the General Data Protection Regulation (GDPR) in the European Union setting stringent requirements for how personal data can be collected, stored, and used by AI systems. These regulations require companies to be transparent about how their AI products handle data and to give users control over their personal information.

Another critical aspect is the ethical use of AI, particularly in mitigating bias and ensuring fairness. Regulatory bodies are pushing for AI systems to be transparent and explainable, allowing users and regulators to understand how decisions are made. This transparency is crucial for building trust and ensuring that AI does not perpetuate existing inequalities or introduce new forms of discrimination.

In conclusion, navigating the regulatory landscape for AI products requires companies to stay abreast of evolving laws and guidelines, prioritize ethical considerations, and ensure transparency in their AI systems. As regulations continue to evolve, companies must be proactive in compliance to ensure the responsible and innovative development of AI technologies.
